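A Strange Bug

Record a video of any length with Camera. Open Gallery, open the album that contains the video you just recorded, and choose "Slideshow" from the menu in the top-right corner. The thumbnail of that video appears skewed and is split into three segments. Once the first loop of the slideshow finishes and the same image comes around again, it displays correctly.

As shown below: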

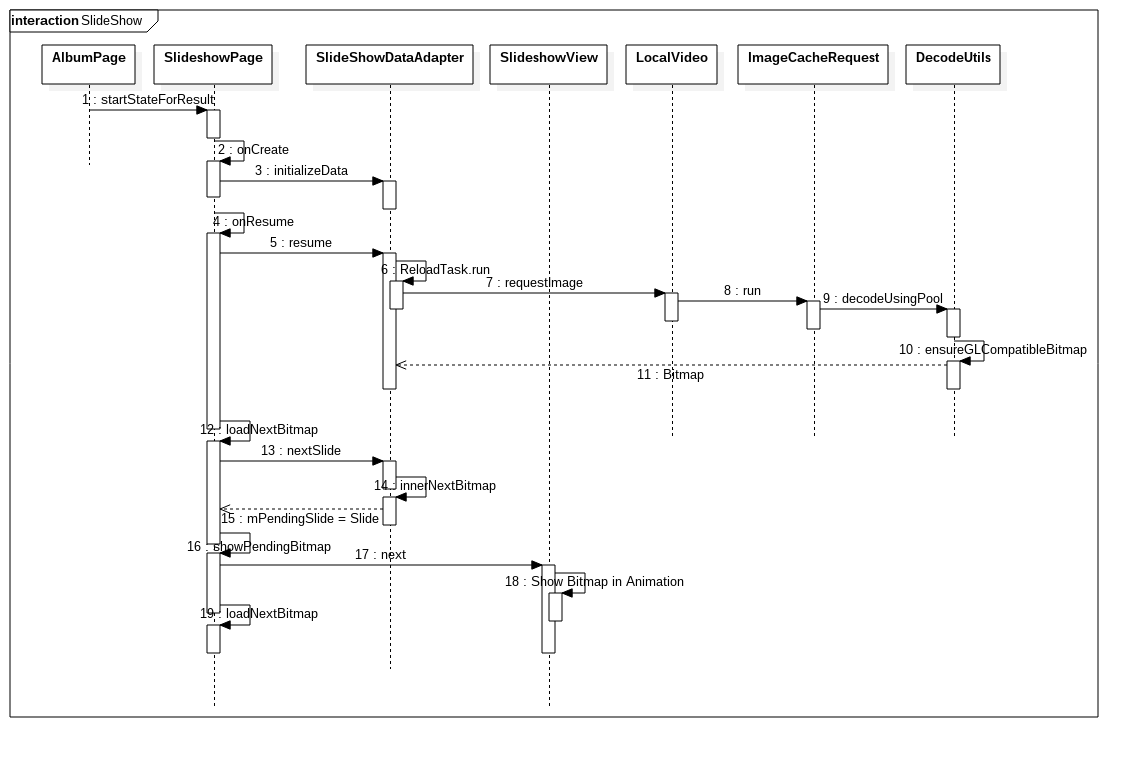

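The Slideshow Flow

A quick look at the Slideshow flow.

The data shown in the slideshow is prepared by SlideshowPage and animated by SlideshowView. The Bitmap being shown comes from ImageCacheRequest. DecodeUtils, the last element in the diagram, is a static utility class; its decodeUsingPool and ensureGLCompatibleBitmap methods post-process the Bitmap.

Let's follow this flow through the code.

First, Slideshow is selected from the menu on the AlbumPage or PhotoPage screen: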

    @Override
    protected boolean onItemSelected(MenuItem item) {
        switch (item.getItemId()) {
            case R.id.action_slideshow: {
                mInCameraAndWantQuitOnPause = false;
                Bundle data = new Bundle();
                // Path of the album to play.
                data.putString(SlideshowPage.KEY_SET_PATH,
                        mMediaSetPath.toString());
                // Loop the slideshow when it reaches the end.
                data.putBoolean(SlideshowPage.KEY_REPEAT, true);
                mActivity.getStateManager().startStateForResult(
                        SlideshowPage.class, REQUEST_SLIDESHOW, data);
                return true;
            }
            // Other menu items are omitted from this excerpt.
            default:
                return false;
        }
    }

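The code above is from AlbumPage. The data passed in has two entries: the album path and a flag saying that playback should repeat.

SlideshowPage is then started through StateManager. If you are not familiar with the Gallery code you may wonder what StateManager is, so here is a brief introduction: AlbumPage and SlideshowPage both extend ActivityState. ActivityState is Gallery's own state class, similar to an Activity, and StateManager manages the lifecycle of these pages with a stack. A separate post will cover it in more detail.

Once SlideshowPage is started, it is initialized in the order onCreate, onResume. onResume calls loadNextBitmap() to load the next slide to display; that method then posts messages to the handler so that showPendingBitmap() -> loadNextBitmap() are called in a loop, which is what keeps the slideshow cycling.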
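To make the stack idea concrete, here is a minimal plain-Java sketch of a stack-based state manager. It is not Gallery's code: `PageState`, `SimpleStateManager`, and the recorded event names are hypothetical stand-ins for ActivityState and StateManager, and the real classes do considerably more.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Hypothetical stand-in for Gallery's ActivityState; it only records callbacks. */
abstract class PageState {
    final List<String> events = new ArrayList<>();
    void onCreate()  { events.add("onCreate"); }
    void onResume()  { events.add("onResume"); }
    void onPause()   { events.add("onPause"); }
    void onDestroy() { events.add("onDestroy"); }
}

final class AlbumState extends PageState {}
final class SlideshowState extends PageState {}

/** Hypothetical stack-based manager: only the state on top is resumed. */
final class SimpleStateManager {
    private final Deque<PageState> stack = new ArrayDeque<>();

    /** Pauses the current top state, then creates and resumes the new one. */
    void startState(PageState next) {
        PageState top = stack.peek();
        if (top != null) top.onPause();
        stack.push(next);
        next.onCreate();
        next.onResume();
    }

    /** Tears down the top state and resumes the one underneath, if any. */
    void finishTop() {
        PageState top = stack.pop();
        top.onPause();
        top.onDestroy();
        PageState below = stack.peek();
        if (below != null) below.onResume();
    }

    PageState top() { return stack.peek(); }
}
```

Starting a `SlideshowState` on top of an `AlbumState` pauses the album page, and finishing the slideshow resumes it, which is the same shape as going from AlbumPage to SlideshowPage and back.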

    mHandler = new SynchronizedHandler(mActivity.getGLRoot()) {
        @Override
        public void handleMessage(Message message) {
            switch (message.what) {
                case MSG_SHOW_PENDING_BITMAP:
                    showPendingBitmap();
                    break;
                case MSG_LOAD_NEXT_BITMAP:
                    loadNextBitmap();
                    break;
                default: throw new AssertionError();
            }
        }
    };
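The alternation between the two messages can be sketched without Android. The following is a simplified model, not the Gallery implementation: `SlideLoopSketch` and its constants are hypothetical, a plain queue stands in for the Handler, and the real code's delay between slides and asynchronous loading are left out.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;

/** Hypothetical model of the load/show message ping-pong. */
final class SlideLoopSketch {
    static final int MSG_LOAD_NEXT = 1;
    static final int MSG_SHOW_PENDING = 2;

    /** Processes messages until `slides` slides were shown; returns the call log. */
    static List<String> run(int slides) {
        Queue<Integer> messages = new ArrayDeque<>();
        List<String> log = new ArrayList<>();
        int shown = 0;
        messages.add(MSG_LOAD_NEXT); // the first load, as kicked off from onResume
        while (!messages.isEmpty() && shown < slides) {
            int what = messages.remove();
            if (what == MSG_LOAD_NEXT) {
                log.add("load");
                messages.add(MSG_SHOW_PENDING); // loading finished: ask to show it
            } else {
                log.add("show");
                shown++;
                messages.add(MSG_LOAD_NEXT);    // shown: ask for the next one
            }
        }
        return log;
    }
}
```

Each handler case does its work and then posts the other message, so the loop keeps going for as long as messages are delivered.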

onResume also does one more important thing: it initializes SlideshowDataAdapter and calls its resume method.

    mModel.resume();

Here is what resume does:

    public class SlideshowDataAdapter implements SlideshowPage.Model {
        @Override
        public synchronized void resume() {
            mIsActive = true;
            mSource.addContentListener(mSourceListener);
            mNeedReload.set(true);
            mDataReady = true;
            mReloadTask = mThreadPool.submit(new ReloadTask());
        }
    }

Its main job is to start ReloadTask, which loads images asynchronously.

    private class ReloadTask implements Job<Void> {
        @Override
        public Void run(JobContext jc) {
            while (true) {
                // Wait while there is nothing to load or the queue is full.
                synchronized (SlideshowDataAdapter.this) {
                    while (mIsActive && (!mDataReady
                            || mImageQueue.size() >= IMAGE_QUEUE_CAPACITY)) {
                        try {
                            SlideshowDataAdapter.this.wait();
                        } catch (InterruptedException ex) {
                            // ignored.
                        }
                        continue;
                    }
                }
                if (!mIsActive) return null;
                mNeedReset = false;

                MediaItem item = loadItem();

                if (mNeedReset) {
                    synchronized (SlideshowDataAdapter.this) {
                        mImageQueue.clear();
                        mLoadIndex = mNextOutput;
                    }
                    continue;
                }

                if (item == null) {
                    synchronized (SlideshowDataAdapter.this) {
                        if (!mNeedReload.get()) mDataReady = false;
                        SlideshowDataAdapter.this.notifyAll();
                    }
                    continue;
                }

                // Decode the thumbnail on this worker thread.
                Bitmap bitmap = item
                        .requestImage(MediaItem.TYPE_THUMBNAIL)
                        .run(jc);

                if (bitmap != null) {
                    synchronized (SlideshowDataAdapter.this) {
                        mImageQueue.addLast(
                                new Slide(item, mLoadIndex, bitmap));
                        if (mImageQueue.size() == 1) {
                            SlideshowDataAdapter.this.notifyAll();
                        }
                    }
                }
                ++mLoadIndex;
            }
        }
    }

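All Bitmaps fetched asynchronously by this task are stored in mImageQueue.

Fetching the Bitmap is itself a Job. requestImage, which returns this Job, is an abstract method declared by MediaItem, and LocalVideo, a subclass of MediaItem, implements it. (Since the bug shows up with a video, we follow the video path directly.)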
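ReloadTask is essentially the producer half of a bounded producer/consumer queue guarded by a single monitor. The sketch below shows that pattern in plain Java under simplifying assumptions: `BoundedSlideQueue` and `BoundedSlideQueueDemo` are hypothetical names, strings stand in for slides, and unlike the original it calls notifyAll() on every change rather than only when the first element arrives.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Hypothetical bounded queue guarded by one monitor, in the spirit of mImageQueue. */
final class BoundedSlideQueue {
    private final Deque<String> queue = new ArrayDeque<>();
    private final int capacity;

    BoundedSlideQueue(int capacity) { this.capacity = capacity; }

    /** Producer side: blocks while the queue is full. */
    synchronized void put(String slide) throws InterruptedException {
        while (queue.size() >= capacity) wait();
        queue.addLast(slide);
        notifyAll(); // wake a consumer that is waiting for a slide
    }

    /** Consumer side: blocks while the queue is empty. */
    synchronized String take() throws InterruptedException {
        while (queue.isEmpty()) wait();
        String slide = queue.removeFirst();
        notifyAll(); // wake the producer that is waiting for free capacity
        return slide;
    }
}

final class BoundedSlideQueueDemo {
    /** Loads n slides on a background thread and consumes them in order. */
    static String run(int n) {
        BoundedSlideQueue q = new BoundedSlideQueue(3);
        Thread loader = new Thread(() -> {
            try {
                for (int i = 0; i < n; i++) q.put("slide-" + i);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        loader.start();
        StringBuilder shown = new StringBuilder();
        try {
            for (int i = 0; i < n; i++) shown.append(q.take()).append(' ');
            loader.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return shown.toString().trim();
    }
}
```

The capacity limit is what keeps the loader from decoding far ahead of what the slideshow has displayed.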

    @Override
    public Job<Bitmap> requestImage(int type) {
        return new LocalVideoRequest(mApplication, getPath(), dateModifiedInSec,
                type, filePath);
    }

LocalVideoRequest is a nested class of LocalVideo:

    public static class LocalVideoRequest extends ImageCacheRequest {
        private String mLocalFilePath;

        LocalVideoRequest(GalleryApp application, Path path, long timeModified,
                int type, String localFilePath) {
            super(application, path, timeModified, type,
                    MediaItem.getTargetSize(type));
            mLocalFilePath = localFilePath;
        }

        @Override
        public Bitmap onDecodeOriginal(JobContext jc, int type) {
            Bitmap bitmap = BitmapUtils.createVideoThumbnail(mLocalFilePath);
            if (bitmap == null || jc.isCancelled()) return null;
            return bitmap;
        }
    }

Following super leads into ImageCacheRequest:

    abstract class ImageCacheRequest implements Job<Bitmap> {
        @Override
        public Bitmap run(JobContext jc) {
            ImageCacheService cacheService = mApplication.getImageCacheService();

            BytesBuffer buffer = MediaItem.getBytesBufferPool().get();
            try {
                // Fast path: the encoded thumbnail is already in the cache.
                boolean found = cacheService.getImageData(mPath, mTimeModified, mType, buffer);
                if (jc.isCancelled()) return null;
                if (found) {
                    BitmapFactory.Options options = new BitmapFactory.Options();
                    options.inPreferredConfig = Bitmap.Config.ARGB_8888;
                    Bitmap bitmap;
                    if (mType == MediaItem.TYPE_MICROTHUMBNAIL) {
                        bitmap = DecodeUtils.decodeUsingPool(jc,
                                buffer.data, buffer.offset, buffer.length, options);
                    } else {
                        bitmap = DecodeUtils.decodeUsingPool(jc,
                                buffer.data, buffer.offset, buffer.length, options);
                    }
                    if (bitmap == null && !jc.isCancelled()) {
                        Log.w(TAG, "decode cached failed " + debugTag());
                    }
                    return bitmap;
                }
            } finally {
                MediaItem.getBytesBufferPool().recycle(buffer);
            }

            // Slow path: decode from the original media, resize, then cache.
            Bitmap bitmap = onDecodeOriginal(jc, mType);
            if (jc.isCancelled()) return null;

            if (bitmap == null) {
                return null;
            }

            if (mType == MediaItem.TYPE_MICROTHUMBNAIL) {
                bitmap = BitmapUtils.resizeAndCropCenter(bitmap, mTargetSize, true);
            } else {
                bitmap = BitmapUtils.resizeDownBySideLength(bitmap, mTargetSize, true);
            }
            if (jc.isCancelled()) return null;

            byte[] array = BitmapUtils.compressToBytes(bitmap);
            if (jc.isCancelled()) return null;

            cacheService.putImageData(mPath, mTimeModified, mType, array);
            return bitmap;
        }
    }
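The shape of run() is a cache-first lookup with a fallback to the original source. The plain-Java sketch below isolates just that control flow; `CacheFirstLoader` is a hypothetical name, a HashMap stands in for ImageCacheService, and the return value only reports which path was taken.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

/** Hypothetical cache-first loader mirroring the control flow of run(). */
final class CacheFirstLoader {
    private final Map<String, byte[]> cache = new HashMap<>();
    int originalDecodes = 0; // counts slow-path runs, for illustration only

    /** Returns "cache" or "original" to show which path produced the data. */
    String load(String key, Supplier<byte[]> decodeOriginal) {
        byte[] cached = cache.get(key);
        if (cached != null) {
            return "cache"; // fast path: result is decoded from cached bytes
        }
        byte[] encoded = decodeOriginal.get(); // slow path: decode the source
        originalDecodes++;
        cache.put(key, encoded); // store the encoded bytes for the next request
        return "original";       // caller gets the freshly built in-memory result
    }
}
```

The point to notice is that the first request for a key and every later request return objects built in two different ways: one straight from the decode-and-resize step, the other decoded from cached bytes.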

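Here the Bitmap is produced along one of two paths (decoded from the cache, or decoded from the original and resized) and then returned. Finally, the next() method displays the slide: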

    public class SlideshowView extends GLView {
        ...
        public void next(Bitmap bitmap, int rotation) {

            mTransitionAnimation.start();

            if (mPrevTexture != null) {
                mPrevTexture.getBitmap().recycle();
                mPrevTexture.recycle();
            }

            mPrevTexture = mCurrentTexture;
            mPrevAnimation = mCurrentAnimation;
            mPrevRotation = mCurrentRotation;

            mCurrentRotation = rotation;
            mCurrentTexture = new BitmapTexture(bitmap);
            if (((rotation / 90) & 0x01) == 0) {
                mCurrentAnimation = new SlideshowAnimation(
                        mCurrentTexture.getWidth(), mCurrentTexture.getHeight(),
                        mRandom);
            } else {
                mCurrentAnimation = new SlideshowAnimation(
                        mCurrentTexture.getHeight(), mCurrentTexture.getWidth(),
                        mRandom);
            }
            mCurrentAnimation.start();

            invalidate();
        }

        @Override
        protected void render(GLCanvas canvas) {
            long animTime = AnimationTime.get();
            boolean requestRender = mTransitionAnimation.calculate(animTime);
            float alpha = mPrevTexture == null ? 1f : mTransitionAnimation.get();

            if (mPrevTexture != null && alpha != 1f) {
                requestRender |= mPrevAnimation.calculate(animTime);
                canvas.save(GLCanvas.SAVE_FLAG_ALPHA | GLCanvas.SAVE_FLAG_MATRIX);
                canvas.setAlpha(1f - alpha);
                mPrevAnimation.apply(canvas);
                canvas.rotate(mPrevRotation, 0, 0, 1);
                mPrevTexture.draw(canvas, -mPrevTexture.getWidth() / 2,
                        -mPrevTexture.getHeight() / 2);
                canvas.restore();
            }
            if (mCurrentTexture != null) {
                requestRender |= mCurrentAnimation.calculate(animTime);
                canvas.save(GLCanvas.SAVE_FLAG_ALPHA | GLCanvas.SAVE_FLAG_MATRIX);
                canvas.setAlpha(alpha);
                mCurrentAnimation.apply(canvas);
                canvas.rotate(mCurrentRotation, 0, 0, 1);
                mCurrentTexture.draw(canvas, -mCurrentTexture.getWidth() / 2,
                        -mCurrentTexture.getHeight() / 2);
                canvas.restore();
            }
            if (requestRender) invalidate();
        }
    }
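The test `((rotation / 90) & 0x01) == 0` in next() decides whether width and height have to be swapped before the animation is set up. A small standalone sketch of that check follows; `RotatedSize` is a hypothetical helper and assumes the rotation is a non-negative multiple of 90.

```java
/** Hypothetical helper isolating the rotation check used in next(). */
final class RotatedSize {
    /** Returns {width, height} as seen on screen after rotating by a multiple of 90 degrees. */
    static int[] displayed(int width, int height, int rotation) {
        // 0 and 180 give an even quarter-turn count, 90 and 270 an odd one.
        boolean upright = ((rotation / 90) & 0x01) == 0;
        return upright ? new int[] {width, height} : new int[] {height, width};
    }
}
```

For a 640x360 texture, rotations of 0 and 180 keep the size at 640x360, while 90 and 270 give 360x640.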

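Diagnosing and Fixing the Bug

For the problem described at the start, the first thing to check is whether the Bitmap being displayed is itself correct.

Saving the Bitmap to a local file showed that the image is fine. Reading that file back into a Bitmap and displaying it no longer produced the split image.

That suggests a first workaround: compress the original Bitmap into a byte stream, then decode a new Bitmap object from that byte stream: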

    ByteArrayOutputStream fOut = new ByteArrayOutputStream();
    bitmap.compress(Bitmap.CompressFormat.JPEG, 100, fOut);
    byte[] bitmapData = fOut.toByteArray();
    bitmap = BitmapFactory.decodeByteArray(bitmapData, 0, bitmapData.length);
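Android's Bitmap classes are not available on a plain JVM, so the following is only a desktop analogue of the same round trip, using `BufferedImage` and `javax.imageio` as stand-ins. `RoundTrip` is a hypothetical helper, and PNG is used instead of JPEG so that the pixels can be compared exactly.

```java
import java.awt.image.BufferedImage;
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import javax.imageio.ImageIO;

/** Hypothetical desktop analogue of the compress-then-decode workaround. */
final class RoundTrip {
    /** Encodes the image to PNG bytes and decodes a brand-new image from them. */
    static BufferedImage reencode(BufferedImage src) {
        ImageIO.setUseCache(false); // keep everything in memory, no temp files
        try {
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            ImageIO.write(src, "png", out);
            return ImageIO.read(new ByteArrayInputStream(out.toByteArray()));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

The result is a different object with its own freshly laid-out pixel storage but the same visible content, which is the property the workaround relies on.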

Tracing further, the image is first loaded in the run() method of ImageCacheRequest; see the comments below.

    @Override
    public Bitmap run(JobContext jc) {
        ImageCacheService cacheService = mApplication.getImageCacheService();

        BytesBuffer buffer = MediaItem.getBytesBufferPool().get();
        try {
            // On the first pass found is false, so the block below is skipped.
            boolean found = cacheService.getImageData(mPath, mTimeModified, mType, buffer);
            if (jc.isCancelled()) return null;
            if (found) {
                BitmapFactory.Options options = new BitmapFactory.Options();
                options.inPreferredConfig = Bitmap.Config.ARGB_8888;
                Bitmap bitmap;
                if (mType == MediaItem.TYPE_MICROTHUMBNAIL) {
                    bitmap = DecodeUtils.decodeUsingPool(jc,
                            buffer.data, buffer.offset, buffer.length, options);
                } else {
                    // The second pass ends up here.
                    bitmap = DecodeUtils.decodeUsingPool(jc,
                            buffer.data, buffer.offset, buffer.length, options);
                }
                if (bitmap == null && !jc.isCancelled()) {
                    Log.w(TAG, "decode cached failed " + debugTag());
                }
                return bitmap;
            }
        } finally {
            MediaItem.getBytesBufferPool().recycle(buffer);
        }

        // The Bitmap shown on the first pass comes from here.
        Bitmap bitmap = onDecodeOriginal(jc, mType);
        if (jc.isCancelled()) return null;

        if (bitmap == null) {
            return null;
        }

        if (mType == MediaItem.TYPE_MICROTHUMBNAIL) {
            bitmap = BitmapUtils.resizeAndCropCenter(bitmap, mTargetSize, true);
        } else {
            // It is then resized.
            bitmap = BitmapUtils.resizeDownBySideLength(bitmap, mTargetSize, true);
        }
        if (jc.isCancelled()) return null;

        byte[] array = BitmapUtils.compressToBytes(bitmap);
        if (jc.isCancelled()) return null;

        cacheService.putImageData(mPath, mTimeModified, mType, array);
        return bitmap;
    }

First, look at the onDecodeOriginal() method,

in LocalVideo:

    @Override
    public Bitmap onDecodeOriginal(JobContext jc, int type) {
        Bitmap bitmap = BitmapUtils.createVideoThumbnail(mLocalFilePath);
        if (bitmap == null || jc.isCancelled()) return null;
        return bitmap;
    }

    public static Bitmap createVideoThumbnail(String filePath) {
        // MediaMetadataRetriever is available on API Level 8
        // but is hidden until API Level 10
        Class<?> clazz = null;
        Object instance = null;
        try {
            clazz = Class.forName("android.media.MediaMetadataRetriever");
            instance = clazz.newInstance();

            Method method = clazz.getMethod("setDataSource", String.class);
            method.invoke(instance, filePath);

            // The method name changes between API Level 9 and 10.
            if (Build.VERSION.SDK_INT <= 9) {
                return (Bitmap) clazz.getMethod("captureFrame").invoke(instance);
            } else {
                byte[] data = (byte[]) clazz.getMethod("getEmbeddedPicture").invoke(instance);
                if (data != null) {
                    Bitmap bitmap = BitmapFactory.decodeByteArray(data, 0, data.length);
                    if (bitmap != null) return bitmap;
                }
                return (Bitmap) clazz.getMethod("getFrameAtTime").invoke(instance);
            }
        } catch (IllegalArgumentException ex) {
            // Assume this is a corrupt video file
        } catch (RuntimeException ex) {
            // Assume this is a corrupt video file.
        } catch (InstantiationException e) {
            Log.e(TAG, "createVideoThumbnail", e);
        } catch (InvocationTargetException e) {
            Log.e(TAG, "createVideoThumbnail", e);
        } catch (ClassNotFoundException e) {
            Log.e(TAG, "createVideoThumbnail", e);
        } catch (NoSuchMethodException e) {
            Log.e(TAG, "createVideoThumbnail", e);
        } catch (IllegalAccessException e) {
            Log.e(TAG, "createVideoThumbnail", e);
        } finally {
            try {
                if (instance != null) {
                    clazz.getMethod("release").invoke(instance);
                }
            } catch (Exception ignored) {
            }
        }
        return null;
    }

This uses reflection to obtain the video thumbnail.

The image is then scaled:

    public static Bitmap resizeDownBySideLength(
            Bitmap bitmap, int maxLength, boolean recycle) {
        int srcWidth = bitmap.getWidth();
        int srcHeight = bitmap.getHeight();
        float scale = Math.min(
                (float) maxLength / srcWidth, (float) maxLength / srcHeight);
        if (scale >= 1.0f) return bitmap;
        return resizeBitmapByScale(bitmap, scale, recycle);
    }

    public static Bitmap resizeBitmapByScale(
            Bitmap bitmap, float scale, boolean recycle) {
        int width = Math.round(bitmap.getWidth() * scale);
        int height = Math.round(bitmap.getHeight() * scale);
        if (width == bitmap.getWidth()
                && height == bitmap.getHeight()) return bitmap;
        // This is the path actually taken.
        Bitmap target = Bitmap.createBitmap(width, height, getConfig(bitmap));
        Canvas canvas = new Canvas(target);
        canvas.scale(scale, scale);
        Paint paint = new Paint(Paint.FILTER_BITMAP_FLAG | Paint.DITHER_FLAG);
        canvas.drawBitmap(bitmap, 0, 0, paint);
        if (recycle) bitmap.recycle();
        return target;
    }
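The size arithmetic in these two methods can be checked on its own. The sketch below repeats only the scale and rounding steps in plain Java; `ResizeMath` is a hypothetical helper, and the maxLength of 640 used in the example is just an illustrative value, not something taken from the Gallery source.

```java
/** Hypothetical helper repeating the scale computation of the resize methods. */
final class ResizeMath {
    /** Returns {width, height} after fitting the longer side within maxLength. */
    static int[] targetSize(int srcWidth, int srcHeight, int maxLength) {
        float scale = Math.min(
                (float) maxLength / srcWidth, (float) maxLength / srcHeight);
        if (scale >= 1.0f) return new int[] {srcWidth, srcHeight}; // never upscale
        return new int[] {
            Math.round(srcWidth * scale), Math.round(srcHeight * scale)};
    }
}
```

A 1920x1080 frame with a maxLength of 640 comes out as 640x360, while a 320x240 frame is returned unchanged because its scale factor would be at least 1.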

The code in this step, however, did not reveal a clear cause.

But walking through the flow did turn up a better place for the fix, in ImageCacheRequest:

    // The Bitmap shown on the first pass comes from here.
    Bitmap bitmap = onDecodeOriginal(jc, mType);
    if (jc.isCancelled()) return null;

    if (bitmap == null) {
        return null;
    }

    if (mType == MediaItem.TYPE_MICROTHUMBNAIL) {
        bitmap = BitmapUtils.resizeAndCropCenter(bitmap, mTargetSize, true);
    } else {
        // It is then resized.
        bitmap = BitmapUtils.resizeDownBySideLength(bitmap, mTargetSize, true);
    }
    if (jc.isCancelled()) return null;

    byte[] array = BitmapUtils.compressToBytes(bitmap);
    if (jc.isCancelled()) return null;

    cacheService.putImageData(mPath, mTimeModified, mType, array);
    return bitmap;

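The problematic Bitmap is the one produced by resizeDownBySideLength, and right after that it is converted to a byte array. So the same strategy as before applies: decode that byte array back into a Bitmap and return it.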

    if (jc.isCancelled()) return null;

    byte[] array = BitmapUtils.compressToBytes(bitmap);
    // This one line is all that is needed.
    bitmap = BitmapFactory.decodeByteArray(array, 0, array.length);
    if (jc.isCancelled()) return null;

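This change does not affect any other flow; it only touches the first fetch of the thumbnail.

Finally,

judging from the symptom, the displayed Bitmap's content is complete and only the arrangement of the data is off; saving it to a byte stream and reading it back puts the data back in order. The next step is therefore to dig into the Bitmap source code to find the real cause.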
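As a purely illustrative aside, one well-known way for pixel data to be complete yet appear sheared is a row-stride mismatch: the writer and the reader disagree about how many elements separate the start of one row from the next. Nothing in this investigation confirms that this is what happens here; the sketch below only shows the general effect, and `StrideDemo` is a hypothetical helper that uses characters in place of pixels.

```java
import java.util.Arrays;

/** Hypothetical demo of a row-stride mismatch; not a confirmed cause of this bug. */
final class StrideDemo {
    /**
     * Writes img into a flat buffer with rows `stride` apart, then reads it
     * back assuming rows are `assumed` apart. Both must be >= the image width.
     */
    static char[][] reinterpret(char[][] img, int stride, int assumed) {
        int h = img.length;
        int w = img[0].length;
        char[] buf = new char[Math.max(stride, assumed) * h];
        Arrays.fill(buf, '.'); // '.' marks padding that is not image data
        for (int y = 0; y < h; y++)
            for (int x = 0; x < w; x++)
                buf[y * stride + x] = img[y][x];
        char[][] out = new char[h][w];
        for (int y = 0; y < h; y++)
            for (int x = 0; x < w; x++)
                out[y][x] = buf[y * assumed + x];
        return out;
    }
}
```

When the two strides agree the image comes back unchanged; when they differ, each row starts a little further off than the previous one, so the picture slants and wraps. Re-encoding and decoding produces a buffer with a self-consistent layout, which would be compatible with the workaround above, but whether that is the actual mechanism still has to be verified against the Bitmap source.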

TBC
